“The ChatGPT moment for physical AI is here — when machines begin to understand, reason and act in the real world. Robotaxis are among the first to benefit,” Nvidia CEO Jensen Huang said during his keynote address at CES 2026.

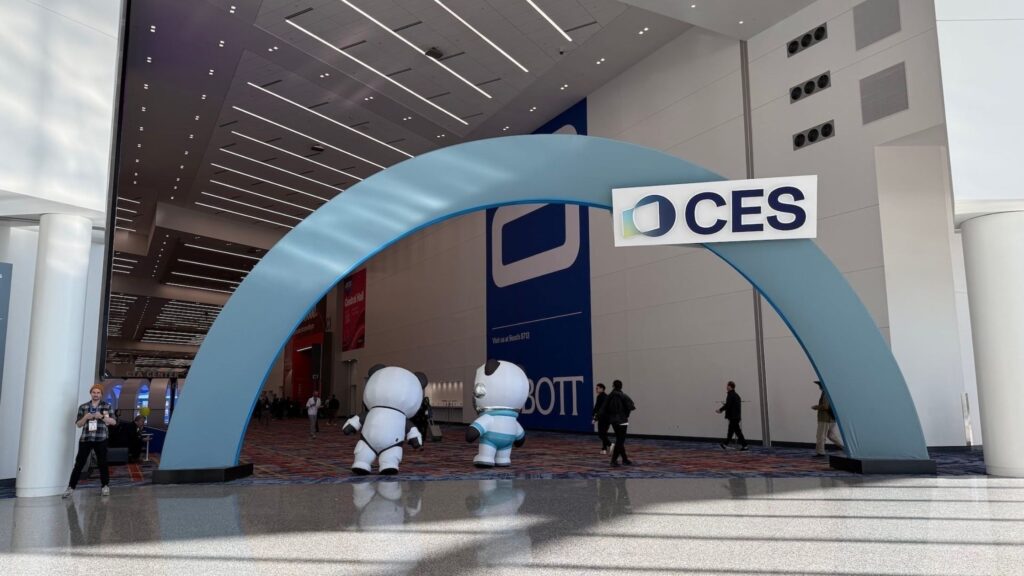

The 2026 Consumer Electronics Show (CES 2026) in Las Vegas was a humdinger of an experience. For the first time, the conversation shifted from whether autonomous vehicles would arrive to how soon they would reshape our daily lives. At the heart of this transformation lies a concept called “physical AI”: artificial intelligence that doesn’t just process information but actively perceives, reasons about, and navigates the three-dimensional world around us.

Understanding Physical AI: The Foundation of Tomorrow’s Vehicles

Physical AI in autonomous vehicles refers to AI systems that perceive, reason, and act in real-world environments, enabling cars to anticipate human behavior rather than simply react to it. CES 2026 was the first show at which this paradigm was presented as ready for large-scale commercial deployment.

The age of thinking cars has quietly begun.

During his keynote address, Nvidia CEO Jensen Huang likened the new technology to ChatGPT’s breakthrough in language understanding. Just as large language models (LLMs) transformed how machines process text by grasping context and generating coherent responses, physical AI enables vehicles to comprehend the complexities of real-world driving scenarios with human-like reasoning.

Traditional autonomous vehicle (AV) stacks answered questions like:

- Is that an object?

- Is it a pedestrian or a cyclist?

Physical AI answers deeper, human-like questions:

- Is that pedestrian distracted?

- Are they likely to step into traffic?

- Should the car slow preemptively rather than reactively?

Nvidia’s Alpamayo: Teaching Cars to Think

The star of CES 2026’s autonomous vehicle showcase was undoubtedly Nvidia’s Alpamayo, an open-source family of AI models that Huang described as “the world’s first thinking, reasoning autonomous vehicle AI.” He added: “Alpamayo is trained end-to-end, literally from camera-in to actuation-out.”

The Architecture of Reasoning

What makes Alpamayo revolutionary isn’t just its processing power; it’s the fundamental approach to how autonomous vehicles interpret their environment. Traditional autonomous driving stacks operate in stages: perception identifies objects, planning determines routes, and control executes maneuvers. Each stage operates somewhat independently, creating potential gaps in understanding.

Alpamayo collapses these stages into one. The system takes in live camera footage and directly decides how the car should steer, brake, or accelerate, using a single integrated AI model trained on a wide range of real-world driving situations.
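The structural difference between a staged stack and an end-to-end model can be illustrated with a deliberately tiny Python sketch. It is not how Alpamayo is built: the single number standing in for a camera image, the hand-tuned constants, the demonstration data, and every function name are hypothetical, and the "training" is a one-parameter least-squares fit (a toy form of behavior cloning) rather than a deep network. What it shows is the contrast in shape: the staged version chains three separately engineered functions with fixed hand-offs, while the end-to-end version learns one mapping from input straight to a steering command.

```python
"""Toy contrast: staged AV stack vs. an end-to-end learned policy.

Everything here is hypothetical and one-dimensional; a real system maps
camera images to steering, braking, and throttle with a deep network.
"""

# Hypothetical logged demonstrations: (raw camera feature, human steering command).
# The single float stands in for an entire camera frame.
DEMOS = [(-2.0, 0.4), (-1.0, 0.2), (0.0, 0.0), (1.0, -0.2), (2.0, -0.4)]


# --- Staged stack: three separately engineered modules ---------------------
def perceive(raw: float) -> float:
    """Perception: estimate lateral offset from lane center, in meters."""
    return 0.5 * raw  # hand-calibrated camera model (made-up constant)


def plan(offset_m: float) -> float:
    """Planning: desired lateral correction back toward the lane center."""
    return -offset_m


def control(correction_m: float) -> float:
    """Control: proportional steering command (made-up gain)."""
    return 0.4 * correction_m


def staged_stack(raw: float) -> float:
    # Each hand-off passes only what the previous module chose to output.
    return control(plan(perceive(raw)))


# --- End-to-end: one mapping learned directly from demonstrations ----------
def train_end_to_end(demos: list[tuple[float, float]]) -> float:
    """Least-squares fit of steer = w * raw (one-parameter behavior cloning)."""
    numerator = sum(x * y for x, y in demos)
    denominator = sum(x * x for x, _ in demos)
    return numerator / denominator


W = train_end_to_end(DEMOS)


def end_to_end_policy(raw: float) -> float:
    # No intermediate "offset" or "plan": input goes straight to actuation.
    return W * raw


if __name__ == "__main__":
    for raw in (-2.0, 0.5, 2.0):
        print(f"raw={raw:+.1f}  staged={staged_stack(raw):+.3f}  "
              f"end_to_end={end_to_end_policy(raw):+.3f}")
```

On this toy data both approaches produce the same commands. The point is where the behavior comes from: three hand-tuned constants spread across modules, versus one parameter fitted directly against what the human driver actually did. In real systems the input is far richer than one number, which is where a single learned mapping is claimed to avoid the gaps between stages.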

Previous AV systems focused heavily on perception accuracy. Alpamayo focuses on intent and contextual prediction.

Instead of:

“That is a pedestrian.”

Alpamayo reasons:

“That pedestrian is looking at their phone, near a curb, during a green light phase; they may step out.”
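The step from “that is a pedestrian” to “they may step out” can be sketched as a risk score built from contextual cues, which then sets a lower target speed before anyone enters the lane. This is a hand-written Python toy, not Alpamayo’s method: a learned driving model would infer this kind of context from data, whereas the cue names, weights, threshold, and slowdown formula below are all invented for illustration.

```python
"""Toy illustration of intent-aware (preemptive) slowing.

The cues, weights, threshold, and slowdown rule are all hypothetical;
a learned driving model would infer this kind of context from data.
"""
from dataclasses import dataclass


@dataclass
class PedestrianCues:
    near_curb: bool          # within roughly a meter of the roadway
    looking_at_phone: bool   # attention directed away from traffic
    facing_road: bool        # body oriented toward the street
    vehicle_has_green: bool  # car won't expect to stop, so a step-out is more dangerous


def step_out_risk(cues: PedestrianCues) -> float:
    """Return a made-up risk score in [0, 1] for this pedestrian entering the car's path."""
    risk = 0.1  # baseline for any pedestrian near the road
    if cues.near_curb:
        risk += 0.3
    if cues.looking_at_phone:
        risk += 0.3
    if cues.facing_road:
        risk += 0.2
    if cues.vehicle_has_green:
        risk += 0.1
    return min(risk, 1.0)


def target_speed(current_mps: float, risk: float, threshold: float = 0.5) -> float:
    """Ease off in proportion to risk once it crosses a threshold.

    A purely reactive rule would hold speed until the pedestrian is actually
    in the lane; here the car slows while they are still on the curb.
    """
    if risk < threshold:
        return current_mps
    return current_mps * (1.0 - 0.5 * risk)


if __name__ == "__main__":
    # The scenario from the text: on the phone, near the curb, car has a green.
    distracted = PedestrianCues(near_curb=True, looking_at_phone=True,
                                facing_road=False, vehicle_has_green=True)
    attentive = PedestrianCues(near_curb=False, looking_at_phone=False,
                               facing_road=False, vehicle_has_green=True)
    for name, cues in (("distracted", distracted), ("attentive", attentive)):
        r = step_out_risk(cues)
        print(f"{name}: risk={r:.2f}, target speed={target_speed(13.4, r):.1f} m/s")
```

The design choice worth noticing is the ordering: the speed decision is made while the pedestrian is still on the curb, which is the “slow preemptively rather than reactively” behavior physical AI is meant to deliver.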

Key technical shifts include:

- End-to-end training from raw camera input to vehicle actuation

- Unified reasoning across perception, planning, and control

- Open-source availability to accelerate industry-wide adoption

“There’s no question in my mind now that this is going to be one of the largest robotics industries,” Jensen Huang said about autonomous driving. “Our vision is that someday every single car, every single truck will be autonomous.”

Huang also revealed that Alpamayo would ship commercially through a major OEM partnership:

“The new Mercedes-Benz CLA will feature Nvidia’s driver assistance software in our first entire stack endeavor.”

A live demo showed the CLA navigating San Francisco streets on Nvidia’s software, yielding to pedestrians and executing complex turns; its behavior suggested anticipation rather than reaction. The U.S. launch is slated for the first quarter of 2026, which would make it one of the first real-world deployments of physical AI in production cars at scale.

Uber × Lucid × Nuro: The Robotaxi as a Product

One of the most interesting reveals at CES 2026 was the three-way partnership between Uber, Lucid, and Nuro. The three companies are coming together to launch a robotaxi in 2026, and the vehicle is production-ready.

Lucid provided the vehicle, its Gravity SUV. Nuro supplied the Level 4 autonomous driving system, and Uber designed the cabin and the app interface.

The most eye-catching feature is the roof-mounted “halo” module. It serves two purposes.

First, it houses the sensors that give the vehicle complete 360-degree awareness, along with backup systems for safety-critical functions. Unlike the clunky sensor arrays on other autonomous cars, the halo is neatly integrated.

Second, it solves a real problem: How do you find your robotaxi in a crowd of identical vehicles?

The halo has external LED lights that display patterns and colors. When you request a ride, your specific car might pulse with your chosen color. You can spot it from far away. It’s a simple solution to a problem most companies overlook.

Tensor’s “Personal” Level 4 Car

Most Level 4 vehicles at CES 2026 were designed for fleets, but Silicon Valley startup Tensor introduced a personal Level 4 AV positioned for private ownership. Marketed as the Tensor Robocar, it’s a self-driving vehicle with a tremendous tech stack.

The standout feature is a retractable steering wheel developed with safety supplier Autoliv and billed as the world’s first foldable steering wheel. When the car drives itself, the wheel folds completely into the dashboard and the driver’s seat becomes a lounge space: the seat swivels slightly, the footwell extends, and screens pop up for entertainment or work.

Before long, a personally owned Level 4 car could earn money when you’re not using it: it drops you at work, picks up ride-hailing fares all day, then returns to get you in the evening.

The Big Players: Hyundai, Waymo, and Zoox

The established companies used CES 2026 to show how far they’ve come.

Hyundai Motor Group: Winning Big

Hyundai had the largest booth at CES 2026. They won “Best of CES 2026” and “Best of Innovation” awards.

Their main attraction was the Ioniq 5 robotaxi, but they also showed the Atlas humanoid robot from Boston Dynamics, a Hyundai Motor Group company. The connection between the two technologies was intentional.

The robotaxi and the robot both use physical AI. Both need to perceive the real world, reason about it, and act. Hyundai sees autonomous vehicles as one piece of a larger physical AI platform. It’s nothing short of smart long-term thinking.

Waymo: The Experienced Operator

Waymo’s CES 2026 presence centered on the Ojai, its Zeekr-built, sixth-generation robotaxi, and the broader plan to move from a handful of dense markets to more than 20 U.S. cities. They already run commercial robotaxi services in multiple cities. Their booth showed both their current Jaguar I-Pace fleet and the Zeekr platform.

The Zeekr is purpose-built for autonomy. Its sensor mounts are seamlessly integrated, and the interior is designed around passengers rather than driver controls. Everything is optimized for driverless fleet operation.

Waymo has a clear data advantage over competitors. They’ve driven tens of millions of autonomous miles in diverse conditions. More driving creates better data, and better data improves the AI. While others showed prototypes, Waymo demonstrated working vehicles picking up real passengers on regular routes.

Zoox: Live Demos on the Strip

Amazon’s Zoox took their demonstration to the Las Vegas Strip. For the first time, CES attendees could ride in their bidirectional robotaxi as part of an operational service.

The Zoox vehicle is unique. It has no front or back. It drives equally well in both directions. The interior seats face each other, creating a social space.

Riding in a Zoox on Las Vegas Boulevard suggested that Level 4 autonomy can cope with even the busiest urban streets. The vehicle handled pedestrians, delivery trucks, ride-share drivers, and tourists with ease. Attendees said riding in the Zoox felt surprisingly normal.

Ford’s Realistic Timeline: Eyes-Off by 2028

Ford stood out at CES 2026 by being honest about its timeline. Instead of promising robotaxis next year, they announced a 2028 target for Level 3 “eyes-off” highway driving.

Ford’s approach differs from competitors. Many companies keep adding sensors: more cameras, more lidar, more radar. Ford is focusing on centralized computing and smarter software instead. Their engineers believe sensor overload hits a point of diminishing returns. More cameras don’t help if your AI can’t make sense of what they see.

According to Ford executives, the company is targeting fully hands-off, eyes-off driving on specific highway segments by 2028, built into vehicles on its new Universal EV (UEV) platform, starting at around $30,000. It also plans to move to a centralized computing architecture, a “single brain” that is more powerful, smaller (around 44% smaller in some modules), and less costly than today’s electronic control units (ECUs), rather than adding more disparate controllers and sensors.

Ford leaders say the Level 3 system will still use lidar, in contrast to the camera-only philosophy that Elon Musk champions for Tesla’s Robotaxi.

What CES 2026 Tells Us About the Future

CES 2026 was an eye-opener. The autonomous vehicle industry has moved past research into real commercialization. The conversations are about timelines, business models, and getting people to trust the technology.

The biggest trend now is convergence: physical AI in autonomous vehicles brings different technologies together. The new tech stack combines computer vision, neural networks, sensor fusion, behavior prediction, and split-second decision-making. Nvidia’s Alpamayo embodies this perfectly.

The bottom line is that technology alone isn’t enough. Autonomous vehicles must be safe AND be perceived as safe.

CES 2026 showed an industry on the edge of real commercial success. The pieces are in place: reliable sensors, sophisticated AI that reasons about complex scenarios, purpose-built vehicles, and business models that make sense.


I’m Dr. Brandial Bright, also known as the AVangelist. As a dedicated and passionate researcher in autonomous and electric vehicles (AVs and EVs), my mission is to educate and raise awareness within the automotive industry. As the Founder and Managing Partner of Fifth Level Consulting, I promote the adoption and innovation of advanced vehicle technologies through speaking engagements, consulting, and research as we progress to Level 5 fully autonomous vehicles.