Advanced Driver Assistance Systems (ADAS) are not only transforming how we drive today; they are also crucial stepping stones towards a driverless future. ADAS technology has already become a staple in many of our cars, changing the way we navigate our daily commutes and road trips. ADAS in autonomous vehicles, however, promises an even more thrilling, safer, and smarter driving experience.

Imagine your car gently nudging you back into your lane before you even realize you’ve drifted, or slamming on the brakes a split second before a pedestrian steps out into the road. That’s the power of the ADAS technology (predictive AI) in action, with companies like Waymo, Zoox, Aurora Innovation, and May Mobility leading the charge.

What is ADAS and How Does It Work?

At its core, an Advanced Driver Assistance System (ADAS) integrates sensors, processing units, and actuators that work together to perceive the vehicle’s surroundings, analyze data, make informed decisions, and execute actions, all with the aim of preventing accidents, improving traffic flow, and ultimately reshaping the way we travel. As Elon Musk, CEO of Tesla, aptly puts it, “Autonomous cars are going to be the biggest revolution in transportation since the invention of the airplane.”

Picture your car with superhuman vision, able to see in the dark, through fog, and even around corners. No, this isn’t science fiction; it’s the reality of sensor fusion, where a combination of radar, lidar, cameras, and other sensors work together to give your car a 360-degree view of the world.

The Evolution of ADAS in Autonomous Vehicles

The history of ADAS can be traced back to rudimentary safety features such as anti-lock braking systems (ABS), which reached production cars in the 1970s, and electronic stability control (ESC), which followed in the 1990s. However, the true acceleration of ADAS development occurred in the 21st century, spurred by advancements in sensor technology, computing power, and machine learning algorithms.

Early ADAS implementations focused on basic driver assistance, with features like adaptive cruise control (ACC) and lane departure warning (LDW) offering convenience and a measure of safety improvement. Since then, the underlying technology has progressed rapidly, and ADAS has advanced with it.

Today, more sophisticated sensors, such as radar, lidar, and high-resolution cameras, enable ADAS to perceive the environment with greater accuracy and detail. Combined with faster onboard computing, this allows real-time analysis of vast amounts of sensor data, which in turn supports complex decision-making and autonomous behaviors in self-driving cars.

Some modern ADAS features in autonomous vehicles include automatic emergency braking (AEB), blind spot monitoring (BSM), and even partially automated driving functions like traffic jam assist. These systems represent a significant step towards higher levels of autonomy, where the vehicle can handle certain driving tasks with little to no human intervention. A prime example of this is Tesla’s Autopilot system, which has demonstrated impressive capabilities in navigating highways, changing lanes, and even parking autonomously. Other impressive examples are the fully driverless cars developed by companies such as Waymo (Alphabet), Zoox (Amazon), and Cruise (General Motors).

When Did ADAS First Appear in the United States?

While some precursors to modern ADAS systems existed earlier, ADAS as we understand it today began to emerge in the United States in the early 2000s.

According to Car and Driver:

“Per Greg Smith Equipment, the early ADAS systems began gaining popularity in the United States in the early 2000s, including:

- 2000 Cadillac Deville – Night Vision (NV)

- 2000 Toyota – Dynamic Laser Cruise Control (ACC)

- 2004 Infiniti FX – Lane Departure Warning (LDW)

- 2006 Lexus LS – Lane Keeping Assist (LKA)

- 2007 Audi – Lane Assist (LDW)

- 2008 GM – Lane Departure Warning (LDW)”

Benefits of ADAS in Autonomous Vehicles

The benefits of ADAS in autonomous vehicles are vast and far-reaching. Here’s a closer look at some of the most promising ones:

- Unprecedented Safety: Numerous studies have demonstrated the efficacy of ADAS in reducing accidents. For instance, a report by the Insurance Institute for Highway Safety (IIHS) found that vehicles equipped with AEB experienced a 50% reduction in rear-end collisions. Similarly, the European Commission estimates that ADAS technologies could prevent up to 140,000 serious road injuries by 2038.

- Enhanced Efficiency: ADAS features like ACC and lane keeping assist not only contribute to safety but also optimize fuel consumption and reduce traffic congestion. A study by the University of Michigan Transportation Research Institute (UMTRI) revealed that vehicles equipped with ACC could achieve fuel savings of up to 10%.

- Improved Accessibility: ADAS holds the promise of making driving more accessible to individuals with disabilities, older adults, and those with limited driving experience. Features like self-parking and automated lane changes can significantly ease the burden of driving for these populations.

- Transformative Mobility: The ultimate goal of ADAS is to pave the way for fully autonomous vehicles, which could revolutionize transportation as we know it. Self-driving cars have the potential to eliminate human error, reduce traffic accidents, transform urban planning, and offer new mobility solutions for those who cannot drive themselves.

In essence, ADAS represents a pivotal technological advancement that is fundamentally reshaping the automotive landscape. As John Krafcik, former CEO of Waymo, puts it, “We believe that self-driving technology can make our roads safer and our cities more accessible.”

Core Components of ADAS

Here are the core components of ADAS:

Sensors and Cameras: The Eyes of ADAS

Sensors and cameras are fundamental to the operation of ADAS, acting as the ‘eyes’ of the system. These components gather real-time data from the vehicle’s surroundings, which is crucial for safe and efficient driving.

Types of Sensors Used:

- Radar: Radar sensors use radio waves to detect objects and their distances, speeds, and directions. They are particularly useful in poor weather conditions, where visibility is limited. Radar is commonly used for features like Adaptive Cruise Control (ACC) and Forward Collision Warning (FCW).

- Lidar: Lidar (Light Detection and Ranging) sensors emit laser pulses to create high-resolution 3D maps of the environment. This technology provides precise distance measurements and is valuable for identifying objects and understanding their shapes and sizes. Lidar is often used in high-level autonomous systems for accurate environmental mapping.

- Ultrasonic Sensors: These sensors use sound waves to detect objects at close range. They are widely used for parking assistance and obstacle detection, offering a detailed view of the immediate surroundings.

- Cameras: Cameras in ADAS provide visual information, capturing images and videos of the vehicle’s environment. These images are processed to identify objects, lane markings, traffic signs, and other critical elements. Cameras are essential for functions like Lane Keep Assist (LKA), Traffic Sign Recognition, and Blind Spot Detection.
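The ranging sensors in the list above (radar, lidar, ultrasonic) all rely on the same basic idea: send out a pulse, time the echo, and convert that time into a distance. The sketch below illustrates only that time-of-flight arithmetic. The function name, the example echo times, and the assumed speed of sound (dry air near 20 °C) are illustrative choices, not values taken from any particular sensor.

```python
SPEED_OF_LIGHT_M_S = 299_792_458.0  # applies to lidar and radar pulses
SPEED_OF_SOUND_M_S = 343.0          # ultrasonic; assumes dry air near 20 °C


def range_from_echo(round_trip_s: float, wave_speed_m_s: float) -> float:
    """Distance to a target from the round-trip time of a reflected pulse."""
    if round_trip_s < 0:
        raise ValueError("round-trip time cannot be negative")
    # The pulse travels out and back, so halve the total path length.
    return wave_speed_m_s * round_trip_s / 2.0


# Hypothetical echo times chosen for illustration.
lidar_m = range_from_echo(200e-9, SPEED_OF_LIGHT_M_S)      # 200 ns echo
ultrasonic_m = range_from_echo(0.006, SPEED_OF_SOUND_M_S)  # 6 ms echo
print(f"lidar: {lidar_m:.2f} m, ultrasonic: {ultrasonic_m:.2f} m")
```

The contrast in time scales is the practical point: a lidar echo from about 30 m returns in a fraction of a microsecond, while an ultrasonic echo from about 1 m takes several milliseconds, which is one reason ultrasonic sensing is confined to close-range tasks such as parking.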

Software and Algorithms: The Brain of ADAS

While sensors and cameras act as the eyes of ADAS, software and algorithms serve as the brain, processing data and making decisions.

AI and Machine Learning in ADAS

Artificial Intelligence (AI) and machine learning are at the core of modern ADAS. These technologies enable systems to learn from data, recognize patterns, and improve over time. Machine learning algorithms analyze vast amounts of data from sensors to identify objects, predict their movements, and assess potential risks. This capability is vital for features like autonomous emergency braking and pedestrian detection.

Real-Time Data Processing and Decision-Making:

ADAS relies on real-time data processing to function effectively. The system must quickly analyze sensor data, identify relevant information, and make split-second decisions. This process involves complex algorithms that prioritize tasks, manage resources, and ensure the vehicle responds appropriately to changing conditions.
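One way to picture task prioritization is a priority queue in which safety-critical work always runs first. The following is a minimal sketch of that idea only. The task names and priority values are hypothetical, and production systems use real-time operating systems with hard deadlines rather than a simple heap.

```python
import heapq

# Lower number means more urgent. Names and priorities are hypothetical.
PRIORITY = {
    "emergency_braking": 0,
    "lane_keeping": 1,
    "cruise_control": 2,
    "sign_recognition": 3,
}


def schedule(pending_tasks):
    """Return task names in the order a priority-based scheduler would run them."""
    # The arrival index breaks ties so equal-priority tasks keep their order.
    heap = [(PRIORITY[name], arrival, name)
            for arrival, name in enumerate(pending_tasks)]
    heapq.heapify(heap)
    order = []
    while heap:
        _, _, name = heapq.heappop(heap)
        order.append(name)
    return order


print(schedule(["sign_recognition", "cruise_control",
                "emergency_braking", "lane_keeping"]))
```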

Communication Systems in ADAS

Communication systems are another critical component of ADAS, enabling vehicles to share information with each other and with infrastructure.

Vehicle-to-Vehicle (V2V) and Vehicle-to-Infrastructure (V2I) Communication:

V2V communication allows vehicles to exchange information about their positions, speeds, and other relevant data. This capability enhances situational awareness and enables coordinated actions, such as synchronized braking or lane changes. V2I communication involves exchanging data between vehicles and road infrastructure, such as traffic lights, signs, and road sensors. This communication helps optimize traffic flow, reduce congestion, and improve safety.
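As a rough illustration of how a V2V exchange could support coordinated braking, the sketch below defines a simplified message and a check that decides whether to start braking early. The message fields, the `should_pre_brake` helper, and the thresholds are all assumptions made for this example; real deployments use standardized message sets with many more fields.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class V2VMessage:
    """Hypothetical, heavily simplified V2V status message."""
    sender_id: str
    position_m: float   # distance along the lane, in metres
    speed_m_s: float
    decel_m_s2: float   # positive value means the sender is braking


def should_pre_brake(own_position_m, own_speed_m_s, msg,
                     hard_brake_m_s2=4.0, horizon_s=3.0):
    """True if a vehicle ahead reports hard braking within our time horizon."""
    gap_m = msg.position_m - own_position_m
    if gap_m <= 0:
        return False  # sender is behind or alongside us
    braking_hard = msg.decel_m_s2 >= hard_brake_m_s2
    within_horizon = gap_m <= own_speed_m_s * horizon_s
    return braking_hard and within_horizon


lead = V2VMessage("veh-42", position_m=160.0, speed_m_s=20.0, decel_m_s2=6.0)
print(should_pre_brake(own_position_m=100.0, own_speed_m_s=25.0, msg=lead))
```

The benefit over onboard sensing alone is that the message can arrive before the braking vehicle is visible, for example when a truck blocks the view.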

Core Functions of ADAS in Autonomous Vehicles

Let’s examine the core functions of ADAS in autonomous vehicles:

Adaptive Cruise Control (ACC)

Adaptive Cruise Control (ACC) systems employ an intricate network of sensors, primarily radar and sometimes lidar or cameras, to detect the distance and relative speed of the vehicle ahead. Using complex algorithms, the system calculates the optimal following distance and automatically adjusts the vehicle’s speed to maintain a safe gap.
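A common textbook way to express this behavior is a constant time-gap policy: the desired gap grows with speed, and the controller accelerates or brakes in proportion to the gap and speed errors. The sketch below assumes that policy. The gains, the 1.8-second time gap, and the acceleration limits are hypothetical values chosen for readability, not tuned parameters from any production system.

```python
def acc_acceleration(own_speed, lead_speed, gap_m, set_speed,
                     time_gap_s=1.8, standstill_gap_m=5.0,
                     k_gap=0.2, k_speed=0.5,
                     max_accel=2.0, max_decel=-3.5):
    """Commanded acceleration (m/s^2) under a constant time-gap policy.

    Speeds are in m/s. All gains and limits are illustrative assumptions.
    """
    desired_gap = standstill_gap_m + time_gap_s * own_speed
    gap_error = gap_m - desired_gap        # negative when following too closely
    speed_error = lead_speed - own_speed   # negative when closing in
    follow_cmd = k_gap * gap_error + k_speed * speed_error
    cruise_cmd = k_speed * (set_speed - own_speed)
    # Obey whichever demand is more conservative, then respect comfort limits.
    cmd = min(follow_cmd, cruise_cmd)
    return max(max_decel, min(max_accel, cmd))


# Closing on a slower car with too small a gap: the command saturates at the braking limit.
print(acc_acceleration(own_speed=30.0, lead_speed=25.0, gap_m=40.0, set_speed=30.0))
```

Taking the minimum of the two commands means the car never exceeds the driver's set speed just because the road ahead is clear, and never tailgates just because the set speed is higher than the traffic flow.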

Advanced ACC Systems: Beyond the basic functionality of maintaining a set speed and distance, advanced ACC systems incorporate stop-and-go capabilities, enabling them to operate in heavy traffic conditions. These systems can bring the vehicle to a complete stop if necessary and resume following the lead vehicle when traffic moves again. Some systems even integrate with GPS navigation data to proactively adjust speed based on upcoming curves or changes in speed limits.

Sensor Fusion for Enhanced Performance: A key trend in ACC development is the fusion of multiple sensor types (radar, lidar, cameras) to create a more robust and reliable system. Combining data from different sensors enables the system to better handle challenging scenarios, such as adverse weather conditions or low-visibility situations.
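A simple way to see why fusion helps is inverse-variance weighting, where each sensor's reading is weighted by how much it can be trusted. The sketch below assumes independent, unbiased range measurements with known standard deviations; real systems typically use tracking filters over time, and the sensor noise figures here are made up for illustration.

```python
def fuse_ranges(measurements):
    """Fuse (range_m, std_dev_m) pairs by inverse-variance weighting.

    Assumes independent, unbiased measurements of the same target.
    Returns the fused range and its standard deviation.
    """
    weights = [1.0 / (sigma ** 2) for _, sigma in measurements]
    total = sum(weights)
    fused = sum(w * r for w, (r, _) in zip(weights, measurements)) / total
    fused_sigma = (1.0 / total) ** 0.5
    return fused, fused_sigma


# Hypothetical readings: radar is precise in range, the camera estimate is noisier.
radar = (50.0, 0.5)
camera = (53.0, 2.0)
fused_range, fused_sigma = fuse_ranges([radar, camera])
print(f"fused range: {fused_range:.2f} m (sigma {fused_sigma:.2f} m)")
```

The fused estimate stays close to the more precise sensor, and its uncertainty is smaller than that of either sensor alone. If one sensor degrades, for instance a camera in fog, raising its standard deviation automatically shifts the weight to the others.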

Lane Keeping Assist (LKA)

Lane Keeping Assist (LKA) systems have evolved significantly from simple lane departure warnings. Today, they utilize a combination of camera-based lane detection and advanced algorithms to not only detect lane markings but also predict the vehicle’s trajectory. This allows LKA systems to intervene proactively, gently steering the vehicle back into its lane if it starts to drift.

Types of LKA: There is a spectrum of LKA implementations, ranging from passive systems that provide visual or audible warnings to active systems that steer the vehicle. In semi-autonomous vehicles, some advanced LKA systems also incorporate haptic feedback, vibrating the steering wheel to alert the driver.

Predictive LKA and Lane Centering: Recent advancements in LKA have introduced predictive capabilities, where the system anticipates upcoming curves or lane changes based on road geometry data and proactively adjusts steering accordingly. Lane centering, a more sophisticated form of LKA, aims to keep the vehicle perfectly centered within the lane rather than just preventing it from crossing lane markings.
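Lane centering can be sketched as a small feedback law: measure how far the car is from the lane center and how far its heading deviates from the lane direction, then steer against both. The example below is a minimal proportional controller under those assumptions. The gains, the steering limit, and the sign convention (positive means left) are illustrative choices, not values from a production system.

```python
def lane_centering_steer(lateral_offset_m, heading_error_rad,
                         k_offset=0.1, k_heading=0.8, max_steer_rad=0.1):
    """Steering command (rad) that pulls the vehicle toward the lane center.

    Convention assumed here: positive offset means the vehicle is left of
    center, positive heading error means it points left of the lane direction,
    and a positive command steers left. Gains are hypothetical.
    """
    cmd = -(k_offset * lateral_offset_m + k_heading * heading_error_rad)
    # Limit the correction so interventions stay gentle.
    return max(-max_steer_rad, min(max_steer_rad, cmd))


# 0.3 m left of center, heading aligned with the lane: a small correction to the right.
print(lane_centering_steer(lateral_offset_m=0.3, heading_error_rad=0.0))
```

A plain lane keeping system would apply a correction like this only near the lane markings, whereas lane centering applies it continuously.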

Automatic Emergency Braking (AEB)

Automatic Emergency Braking (AEB) is one of the most critical ADAS functions, designed to mitigate or even prevent collisions. AEB systems utilize sensors like radar, lidar, or cameras to detect potential obstacles ahead. If a collision is imminent, the system automatically applies the brakes to reduce the severity of the impact or avoid it altogether.
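Whether a collision is "imminent" is often framed in terms of time-to-collision (TTC): the current gap divided by the closing speed. The sketch below shows a two-stage decision built on that quantity. The warning and braking thresholds are hypothetical, and real systems also account for braking capability, road conditions, and driver reaction.

```python
def time_to_collision(gap_m, closing_speed_m_s):
    """Seconds until impact if neither vehicle changes speed."""
    if closing_speed_m_s <= 0:
        return float("inf")  # not closing in, so no collision is predicted
    return gap_m / closing_speed_m_s


def aeb_action(gap_m, closing_speed_m_s, warn_ttc_s=2.6, brake_ttc_s=1.4):
    """Return 'brake', 'warn', or 'none'. Thresholds are illustrative assumptions."""
    ttc = time_to_collision(gap_m, closing_speed_m_s)
    if ttc <= brake_ttc_s:
        return "brake"
    if ttc <= warn_ttc_s:
        return "warn"
    return "none"


for gap in (50.0, 20.0, 10.0):
    print(gap, aeb_action(gap_m=gap, closing_speed_m_s=10.0))
```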

AEB with Pedestrian and Cyclist Detection: Recent advancements in AEB have incorporated pedestrian and cyclist detection, expanding the system’s capability to protect vulnerable road users. This involves sophisticated algorithms that can distinguish between different types of objects and predict their trajectories.

AEB in Low-Light Conditions: A major challenge for AEB systems is operating effectively in low-light conditions. Some manufacturers are addressing this by incorporating thermal imaging cameras, which can detect heat signatures of pedestrians and animals even in complete darkness.

Blind Spot Monitoring (BSM)

Blind Spot Monitoring (BSM) systems in AVs utilize radar or ultrasonic sensors to detect vehicles in the driver’s blind spots. When a vehicle is detected, the system either feeds that detection to the vehicle’s own driving logic or warns the driver, typically through a visual indicator in the side mirror or on the A-pillar.

Advanced BSM Features: Some BSM systems go beyond simple warnings and incorporate active intervention features. For example, in semi-autonomous vehicles, if the driver attempts to change lanes while a vehicle is detected in the blind spot, the system may provide a stronger warning, such as steering wheel vibration or even gentle counter-steering to prevent the lane change.
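The escalation described above can be sketched in two steps: decide whether a detected vehicle sits in a blind-spot zone, then choose the response based on the turn signal. The zone dimensions, function names, and response labels below are assumptions for illustration; actual zones depend on the vehicle and sensor placement.

```python
def blind_spot_side(rel_x_m, rel_y_m,
                    zone_rear_m=-7.0, zone_front_m=1.0, lane_width_m=3.5):
    """Return 'left', 'right', or None for a target at (rel_x_m, rel_y_m).

    rel_x_m is the longitudinal offset (positive ahead) and rel_y_m the
    lateral offset (positive left) from the vehicle. Zone sizes are hypothetical.
    """
    in_length = zone_rear_m <= rel_x_m <= zone_front_m
    # Adjacent lane: between half a lane and one and a half lanes to the side.
    in_adjacent_lane = 0.5 * lane_width_m <= abs(rel_y_m) <= 1.5 * lane_width_m
    if not (in_length and in_adjacent_lane):
        return None
    return "left" if rel_y_m > 0 else "right"


def bsm_response(target_side, turn_signal):
    """Escalate from a mirror indicator to a stronger warning on a lane-change attempt."""
    if target_side is None:
        return "none"
    if turn_signal == target_side:
        return "strong_warning"  # e.g. steering wheel vibration or counter-steering
    return "indicator"


side = blind_spot_side(rel_x_m=-3.0, rel_y_m=3.5)
print(side, bsm_response(side, turn_signal="left"))
```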

Cross-Traffic Alert: A related feature, rear cross-traffic alert, utilizes the same sensors to detect vehicles approaching from the sides when the vehicle is reversing out of a parking space. This can be particularly useful in situations with limited visibility.

Parking Assistance

Parking assistance utilizes a combination of cameras, ultrasonic sensors, and even lidar to create a 360-degree view of the vehicle’s surroundings. In self-driving cars, the systems can autonomously maneuver the vehicle into a parking space, either parallel or perpendicular.
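Before a car can maneuver into a space, it has to find one. A simple approach, assumed here for illustration, is to drive past the parked cars while a side-facing ultrasonic sensor samples the distance to the curb side, then look for a stretch that is both deep and long enough. The function name, sample spacing, and size thresholds are hypothetical.

```python
def find_parking_gaps(side_ranges_m, step_m, min_depth_m=2.0, min_length_m=5.5):
    """Find free gaps in a sequence of side-facing range samples.

    side_ranges_m holds one reading per step_m of forward travel.
    Returns (start_position_m, length_m) for each gap that is deep and
    long enough. Thresholds are illustrative assumptions.
    """
    gaps = []
    start = None
    # A trailing 0.0 sentinel closes any gap that runs to the end of the scan.
    for i, reading in enumerate(list(side_ranges_m) + [0.0]):
        if reading >= min_depth_m and start is None:
            start = i
        elif reading < min_depth_m and start is not None:
            length = (i - start) * step_m
            if length >= min_length_m:
                gaps.append((start * step_m, length))
            start = None
    return gaps


# Parked car, then 6 m of free space, then another parked car (samples every 0.5 m).
scan = [0.6] * 4 + [2.5] * 12 + [0.6] * 4
print(find_parking_gaps(scan, step_m=0.5))
```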

Remote Parking: A cutting-edge feature of the parking assistance technology is remote parking. Fully autonomous vehicles can park with precision, minimizing the space needed between vehicles and maximizing the number of cars that can fit in a given area.

Summoning and Automated Valet Parking: Automotive companies and tech giants are building AVs that can autonomously navigate to a designated pick-up location. Automated valet parking, another sophisticated technology, allows the vehicle to autonomously find a parking space and park itself, potentially even returning to the driver upon request.

How ADAS Contributes to the Six Levels of Driving Autonomy

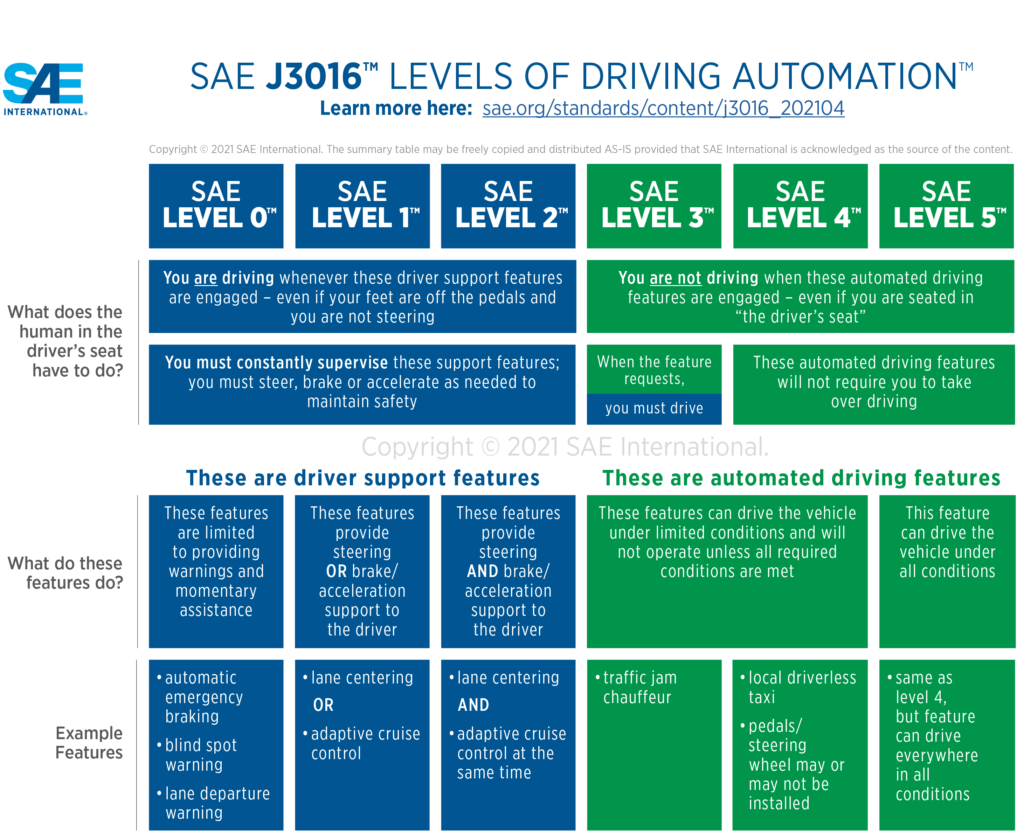

Let’s examine how ADAS features align with the SAE Levels of Driving Automation, a widely recognized framework that categorizes the degrees of vehicle autonomy.

Here is a breakdown of the six levels of driving autonomy, as defined by SAE:

- Level 0 (No Automation): The human driver performs all driving tasks.

- Level 1 (Driver Assistance): The vehicle can assist with either steering or acceleration/deceleration, but not both simultaneously. Examples include Lane Keeping Assist (LKA) and Adaptive Cruise Control (ACC), each used on its own.

- Level 2 (Partial Automation): The vehicle can control both steering and acceleration/deceleration simultaneously under specific conditions, such as highway driving. The human driver must remain engaged and ready to take over at any time. Tesla’s Autopilot and GM’s Super Cruise are examples of Level 2 systems.

- Level 3 (Conditional Automation): The vehicle can perform all driving tasks under specific conditions, but the human driver must be ready to take control when requested. The driver can disengage from the driving task, for example to watch a movie, but must be able to respond promptly.

- Level 4 (High Automation): The vehicle can perform all driving tasks under specific conditions without human intervention. The human driver is not required to pay attention, and the vehicle can safely stop if the driver does not respond to a request to intervene.

- Level 5 (Full Automation): The vehicle can perform all driving tasks under all conditions, with no human intervention required. The vehicle may not even have traditional controls like a steering wheel or pedals.
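The distinctions between the levels listed above come down to a few yes-or-no questions: does the system steer, does it control speed, must the driver supervise, must the driver act as fallback, and is operation limited to specific conditions? The sketch below encodes that reading of the list as a small decision function. The function and parameter names are hypothetical, and the logic is a simplification rather than a restatement of the SAE standard.

```python
from enum import IntEnum


class SAELevel(IntEnum):
    NO_AUTOMATION = 0
    DRIVER_ASSISTANCE = 1
    PARTIAL_AUTOMATION = 2
    CONDITIONAL_AUTOMATION = 3
    HIGH_AUTOMATION = 4
    FULL_AUTOMATION = 5


def classify_level(steers, controls_speed, driver_supervises,
                   driver_is_fallback, limited_conditions):
    """Map a system's capabilities to a level, following the list above."""
    if not steers and not controls_speed:
        return SAELevel.NO_AUTOMATION
    if steers != controls_speed:
        return SAELevel.DRIVER_ASSISTANCE      # one of the two, not both
    if driver_supervises:
        return SAELevel.PARTIAL_AUTOMATION     # both, but driver stays engaged
    if driver_is_fallback:
        return SAELevel.CONDITIONAL_AUTOMATION # driver takes over on request
    if limited_conditions:
        return SAELevel.HIGH_AUTOMATION        # no driver needed within limits
    return SAELevel.FULL_AUTOMATION


# A highway assist that steers and controls speed while the driver watches the road.
print(classify_level(True, True, driver_supervises=True,
                     driver_is_fallback=True, limited_conditions=True).name)
```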

How ADAS Features Contribute to Each Level of Autonomy

Here’s how ADAS features contribute to each level of driving autonomy:

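- Level 1: A single sustained assistance feature, such as Adaptive Cruise Control (ACC) or Lane Keeping Assist (LKA), supports the driver but still requires full human engagement. Warning and momentary-intervention features like Lane Departure Warning (LDW), Forward Collision Warning (FCW), and Automatic Emergency Braking (AEB) are classed by SAE as Level 0 driver support.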

- Level 2: More advanced ADAS features, such as Adaptive Cruise Control (ACC) with lane centering and Traffic Jam Assist, enable the vehicle to handle some driving tasks, but the driver must remain attentive and ready to take over.

- Level 3: This level requires a comprehensive suite of ADAS features, including advanced sensors like lidar, high-definition maps, and sophisticated AI algorithms to monitor the driving environment and make decisions. The human driver can disengage from driving tasks but must be ready to resume control when prompted.

- Levels 4 and 5: These levels demand a highly complex and redundant system of sensors, processors, and algorithms that can handle virtually any driving scenario. The vehicle must be able to navigate complex environments, interpret traffic signals and signs, and make safe decisions in unpredictable situations.

The Future of ADAS in Autonomous Vehicles

As of 2024, several autonomous vehicle companies, including Waymo, Zoox, and Cruise, are actively developing and testing Level 4 and Level 5 autonomous vehicles for ride-hailing and delivery services in limited areas. In fact, Waymo recently announced that it has made more than 200,000 paid ride-hailing trips in a week in three cities – San Francisco, Phoenix, and Los Angeles.

Despite these deployments, the future of fully autonomous vehicles (Level 5) still faces significant technical, regulatory, and societal challenges.

One of the main hurdles is ensuring the safety and reliability of autonomous systems in complex and unpredictable real-world environments. Additionally, there are legal and ethical questions surrounding liability in the event of an accident involving a self-driving car.

Despite these challenges, the rapid advancement of ADAS technology and the increasing investment in autonomous vehicle development suggest that the future of transportation is undoubtedly heading towards greater automation. While the timeline for perfecting full autonomy remains uncertain, the evolution of ADAS is paving the way for a safer, more efficient, and more accessible transportation ecosystem.

I’m Dr. Brandial Bright, also known as the AVangelist. As a dedicated and passionate researcher in autonomous and electric vehicles (AVs and EVs), my mission is to educate and raise awareness within the automotive industry. As the Founder and Managing Partner of Fifth Level Consulting, I promote the adoption and innovation of advanced vehicle technologies through speaking engagements, consulting, and research as we progress to level 5 fully autonomous vehicles.