
Imagine a world where cars drive themselves, freeing you from the mundane task of navigating through traffic. Imagine, too, a world where children, people with disabilities, and the elderly could, in theory, travel without a driver, which would greatly increase accessibility. Autonomous vehicles (AVs) promise to revolutionize transportation, offering safer, more efficient travel. For example, more than 40,000 people died in road crashes in the United States in each of 2022 and 2023, according to data presented by the NHTSA. With the majority of accidents being the result of human error, even a 90% adoption rate of driverless cars could translate into thousands of lives being saved every year.

But what exactly makes these self-driving marvels tick?

The functionality of autonomous vehicles largely depends on deep learning, a branch of machine learning (ML) within the wider field of artificial intelligence (AI). But what are the components of a self-driving system, and how do they work together?

In this post, we’ll explore the core components of autonomous vehicles, breaking down the intricate systems and technologies that enable them to operate independently.

What is an Autonomous Vehicle and How Does It Work?

An autonomous vehicle (AV), also known as a self-driving car, is a vehicle capable of driving itself without human input, that is to say, without a driver behind the steering wheel. Autonomous vehicles are designed to go anywhere a traditional car goes and do everything an experienced human driver does.

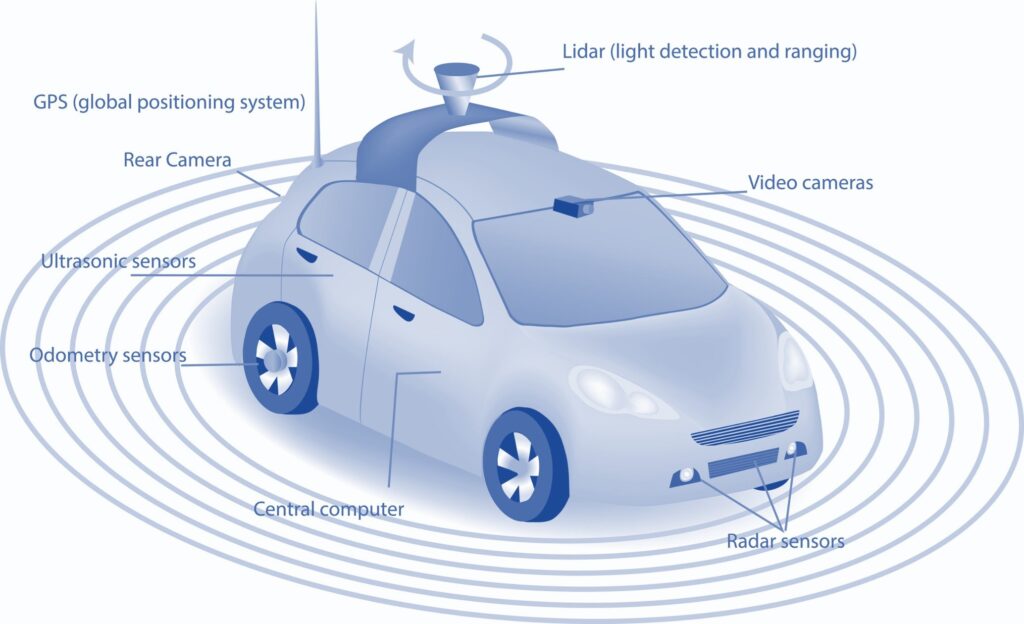

There are six levels of driving automation, as defined by the Society of Automotive Engineers (SAE), ranging from Level 0 (no automation) to Level 5 (full automation).

See the breakdown in the diagrams below:

Most AVs currently on the road are at Level 2 or 3, which means they still require some human supervision. Think of Tesla’s “Autopilot,” a Level 2 driver-assistance feature that still requires an attentive driver. However, companies such as Waymo, Zoox, Motional, and Cruise are developing and testing Level 4 and 5 AVs, which are meant to operate without human intervention: Level 4 within a defined operating area and set of conditions, and Level 5 everywhere.

Core Components of Autonomous Vehicles

Let’s carefully dissect the core components of autonomous vehicles:

Perception Systems

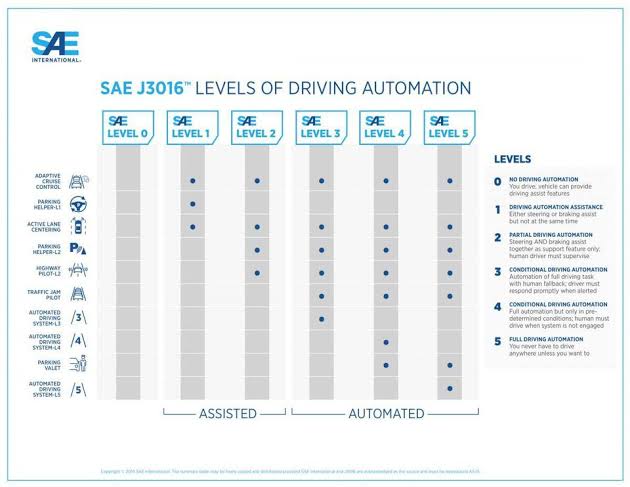

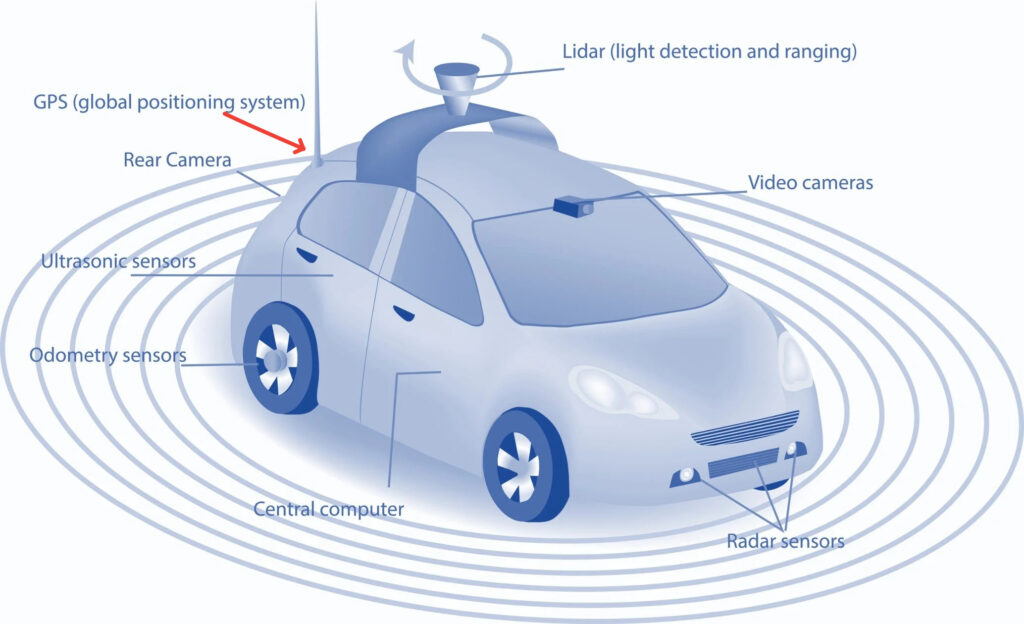

Sensors

LiDAR (Light Detection and Ranging)

LiDAR sensors are like the eyes of an autonomous vehicle. They are usually found atop AVs. They work by emitting laser beams and measuring the time it takes for the light to bounce back from objects. This process creates a precise 3D map of the environment. LiDAR is essential for detecting and classifying objects such as pedestrians, cyclists, and other vehicles. LiDAR sensors also play a critical role in obstacle detection and avoidance for AVs.
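
The time-of-flight idea described above can be sketched in a few lines of Python. This is only an illustration under simple assumptions: the function names and the 200-nanosecond echo are made up for the example, and a real LiDAR unit does this in hardware for many thousands of pulses per second.

```python
import math

SPEED_OF_LIGHT_M_PER_S = 299_792_458.0  # exact, by definition of the metre


def lidar_range_m(round_trip_time_s: float) -> float:
    """Distance to a target from a laser pulse's round-trip time."""
    if round_trip_time_s < 0:
        raise ValueError("round-trip time cannot be negative")
    # The pulse travels out and back, so halve the total path length.
    return SPEED_OF_LIGHT_M_PER_S * round_trip_time_s / 2.0


def lidar_point_xyz(range_m: float, azimuth_rad: float, elevation_rad: float):
    """Convert one range reading plus beam angles into a 3D point (x, y, z)."""
    horizontal_m = range_m * math.cos(elevation_rad)
    return (
        horizontal_m * math.cos(azimuth_rad),  # x: forward
        horizontal_m * math.sin(azimuth_rad),  # y: left
        range_m * math.sin(elevation_rad),     # z: up
    )


if __name__ == "__main__":
    # Hypothetical echo that returns after 200 nanoseconds.
    distance = lidar_range_m(200e-9)
    print(f"range: {distance:.2f} m")
    print("point:", lidar_point_xyz(distance, math.radians(30), math.radians(-2)))
```

Repeating the second function for every beam angle in a sweep is what turns individual range readings into the 3D point cloud the AV uses as its map.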

LiDAR Sensor Use Cases

Precise 3D Mapping: LiDAR sensors emit laser pulses and measure the time it takes for those pulses to bounce back from objects. This data is used to create highly detailed 3D maps of the surrounding environment, including roads, obstacles, and other vehicles. The accuracy of these maps is crucial for safe navigation and decision-making.

Object Detection and Tracking: By analyzing the reflected laser data, LiDAR can accurately detect and track objects in real time. This allows autonomous vehicles to identify pedestrians, cyclists, animals, and other vehicles on the road, even in low light, although heavy rain, fog, or snow can degrade its performance.

Distance Measurement: LiDAR sensors are exceptionally good at measuring distances to objects. This information is essential for maintaining safe distances, avoiding collisions, and making informed decisions about when to accelerate, brake, or change lanes.

Path Planning and Navigation: The 3D maps and object data provided by LiDAR are used by autonomous vehicle algorithms to plan safe and efficient paths. This includes determining the optimal route, predicting the movements of other road users, and avoiding obstacles.

By accurately identifying and classifying obstacles, such as stationary objects, debris on the road, or unexpected changes in road conditions, LiDAR sensors enable AVs to plan and execute safe maneuvers to avoid collisions.

LiDAR Sensor Limitations

Cost: High-resolution LiDAR sensors are expensive, making them a significant factor in the overall cost of autonomous vehicles.

Limited Range: The range of LiDAR sensors is limited compared to radar, especially in adverse weather conditions like heavy rain or fog. This can affect the vehicle’s ability to detect distant objects.

Complexity: LiDAR systems require complex algorithms and processing power to interpret the vast amounts of data they generate, which can be a challenge for real-time decision-making.

Vulnerability to Interference: LiDAR can be affected by interference from other LiDAR sensors or bright light sources.

Although LiDAR technology is widely used in the AV industry, some autonomous vehicle manufacturers do not rely on it. A striking example is Tesla: Elon Musk has made a deliberate decision not to use LiDAR in Tesla’s fleet, opting instead for a camera-based approach driven by neural networks.

LiDAR sensors are often integrated with other sensor technologies, such as cameras, radar, and GPS, to create a comprehensive perception system for AVs.

Radar (Radio Detection and Ranging)

Radar uses radio waves to detect objects and measure their distance and speed. It’s particularly useful for collision avoidance and adaptive cruise control because it can see far ahead and through poor weather conditions. Radar sensors are typically found in the bumpers of AVs. Radar’s superpower lies in its ability to see through conditions that challenge other sensors, such as fog, rain, or snow. This makes radar an invaluable asset for ensuring safety on the road, regardless of the weather’s mood swings.

Radar Sensor Use Cases

Weatherproof: Unlike camera sensors, which can be blinded by glare or bad weather, and lidar, which can be affected by rain or snow, radar sensors are pretty much weatherproof. They can see through fog, rain, and even light snow, making them reliable in all sorts of conditions.

Long-range detection: Radar sensors can detect objects at a greater distance than cameras and some lidar systems. This gives autonomous vehicles more time to react to obstacles or changes in traffic flow.

Accurate speed measurement: Radar sensors are exceptionally good at measuring the speed of objects, including other vehicles, pedestrians, and cyclists. This information is vital for autonomous vehicles to make safe decisions, like when to brake or change lanes.
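
Radar measures speed through the Doppler effect: a target moving toward or away from the sensor shifts the frequency of the reflected wave. Here is a minimal sketch of that relationship. The 77 GHz carrier is an assumption chosen because it sits in a band commonly used for automotive radar, and the function names are illustrative only.

```python
SPEED_OF_LIGHT_M_PER_S = 299_792_458.0
CARRIER_HZ = 77e9  # assumed carrier frequency for this example


def doppler_shift_hz(radial_speed_m_per_s: float, carrier_hz: float = CARRIER_HZ) -> float:
    """Frequency shift of the echo from a target closing at the given speed."""
    # Factor of 2: the wave is shifted once on the way out and once on the way back.
    return 2.0 * radial_speed_m_per_s * carrier_hz / SPEED_OF_LIGHT_M_PER_S


def radial_speed_m_per_s(shift_hz: float, carrier_hz: float = CARRIER_HZ) -> float:
    """Invert the relationship: recover the target's radial speed from the shift."""
    return shift_hz * SPEED_OF_LIGHT_M_PER_S / (2.0 * carrier_hz)


if __name__ == "__main__":
    shift = doppler_shift_hz(10.0)  # a car closing at 10 m/s
    print(f"Doppler shift: {shift:.0f} Hz")
    print(f"Recovered speed: {radial_speed_m_per_s(shift):.2f} m/s")
```

Note that this gives only the radial component of the speed, meaning the part directed along the line between the radar and the target.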

Radar Sensor Limitations

Lower resolution: While radar sensors can detect objects, they don’t provide as detailed a picture as cameras or lidar. This can make it harder for autonomous vehicles to identify the exact type of object they’re seeing.

Vulnerable to interference: Radar sensors can sometimes be affected by interference from other radar systems or electronic devices. This can lead to false readings or missed detections.

Limited field of view: Radar sensors typically have a narrower field of view than cameras and lidar. This means they might miss objects that are off to the side or behind the vehicle.

Despite their limitations, radar sensors are an essential part of the sensor suite in autonomous vehicles. They provide critical information that complements the data from cameras and lidar, helping to create a more complete picture of the vehicle’s surroundings.

Cameras

Cameras provide rich visual information, capturing images of the environment just like a human eye. They are crucial for tasks like lane detection, traffic sign recognition, and object identification. Camera sensors are like your windows to the world, giving you a panoramic view of your surroundings. They come in various shapes and sizes, strategically placed all around the vehicle—on the roof, bumpers, sides, and even inside.

Camera Sensor Use Cases

Sight Beyond Human Eyes: Some camera sensors can see in a wider spectrum than humans, including near-infrared light, which helps them detect objects even in low-light conditions.

High-Resolution Imaging: Thanks to their advanced technology, camera sensors capture incredibly detailed images, allowing the AV to identify even the tiniest details of the road and its environment, such as traffic signs, lane markings, and pedestrians.

Wide Field of View: They offer a broad perspective of the surroundings, minimizing blind spots and maximizing awareness.

Relatively Low Cost: Compared to other sensor technologies like LiDAR, cameras are more affordable, making them a practical choice for widespread adoption in AVs.

Camera Sensor Limitations

Weather Sensitivity: Rain, fog, snow, or even glare can impair their vision, making it difficult for them to see clearly.

Night Vision Challenges: While they perform better than human eyes in low light, they still have limitations in complete darkness.

Distance Estimation: Cameras alone may not be as accurate in estimating the distance of objects as other sensors like LiDAR.

To overcome these weaknesses, AVs rely on a combination of sensors—cameras, LiDAR, radar, ultrasonic sensors—each with its own strengths. By fusing the data from these different sources, the AV gains a more comprehensive and accurate understanding of its environment.

Ultrasonic Sensors

Ultrasonic sensors (USS) play a crucial role in autonomous vehicles, particularly in near-field perception for tasks like parking, low-speed maneuvering, and obstacle avoidance. They operate by emitting high-frequency sound waves and measuring the time it takes for these waves to bounce back after hitting an object. This information is then used to calculate the distance to the object. Ultrasonic sensors are usually found on the front and rear bumpers.
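
The echo-timing calculation described above is simple enough to sketch directly. The version below assumes dry air and uses a common linear approximation for the speed of sound as a function of temperature; the function names and the 10-millisecond echo are illustrative.

```python
def speed_of_sound_m_per_s(air_temp_c: float = 20.0) -> float:
    """Approximate speed of sound in dry air (linear fit around everyday temperatures)."""
    return 331.3 + 0.606 * air_temp_c


def ultrasonic_distance_m(echo_time_s: float, air_temp_c: float = 20.0) -> float:
    """Distance to an obstacle from the time between sending a ping and hearing its echo."""
    if echo_time_s < 0:
        raise ValueError("echo time cannot be negative")
    # The ping travels to the obstacle and back, so halve the path length.
    return speed_of_sound_m_per_s(air_temp_c) * echo_time_s / 2.0


if __name__ == "__main__":
    # Hypothetical echo received 10 ms after the ping, on a mild and on a freezing day.
    for temp_c in (20.0, -10.0):
        print(f"{temp_c:+.0f} C: {ultrasonic_distance_m(0.010, temp_c):.3f} m")
```

Because sound is so much slower than light, the same timing approach that needs nanosecond precision for LiDAR only needs millisecond precision here, which is one reason ultrasonic sensors are inexpensive.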

Ultrasonic Sensor Use Cases

Near-Field Perception: USS are specifically designed for short-range detection, typically up to a few meters. This makes them ideal for detecting obstacles in tight spaces and during low-speed maneuvers like parking.

Low-Speed Maneuvering: USS assist in obstacle avoidance and navigation in tight spaces at low speeds.

Collision Avoidance: USS provide an additional layer of protection by detecting obstacles in close proximity to the vehicle and alerting the system to take necessary actions.

Ultrasonic Sensor Limitations

Limited Range: The effective range of USS is limited, usually to a few meters. This makes them unsuitable for detecting obstacles at longer distances, where other sensors like radar and LiDAR are more effective.

Sensitivity to Soft Materials: USS are less effective at detecting soft or sound-absorbing materials like cloth or foam, as these materials absorb the sound waves instead of reflecting them back.

Susceptibility to Interference: USS can be affected by interference from other ultrasonic devices, such as those used in parking assistance systems of nearby vehicles.

Limited Information: USS primarily provide information about the distance to an object. They do not offer detailed information about the object’s shape, size, or velocity, which can be obtained from other sensors like LiDAR or radar.

Ultrasonic sensors are a valuable component in the sensory suite of autonomous vehicles due to their affordability, robustness, and effectiveness in near-field perception. While they have limitations in terms of range and the type of information they provide, their complementary role alongside other sensors contributes to the overall safety and reliability of autonomous vehicles.

Combining data from multiple sensors to help AVs sufficiently understand their environment is known as sensor fusion.
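
To make sensor fusion a little more concrete, here is a deliberately simple sketch: two sensors report the distance to the same object, each with its own uncertainty, and the estimates are combined by inverse-variance weighting. This is a textbook statistical technique that assumes independent errors, not the method any particular AV uses; production systems typically rely on more elaborate filters. The sensor readings and variances are made up.

```python
def fuse_estimates(measurements):
    """Combine (value, variance) pairs into one estimate by inverse-variance weighting.

    More certain sensors (smaller variance) get proportionally more weight.
    Returns (fused_value, fused_variance).
    """
    if not measurements:
        raise ValueError("need at least one measurement")
    if any(variance <= 0 for _, variance in measurements):
        raise ValueError("variances must be positive")
    weights = [1.0 / variance for _, variance in measurements]
    total_weight = sum(weights)
    fused_value = sum(w * value for w, (value, _) in zip(weights, measurements)) / total_weight
    return fused_value, 1.0 / total_weight


if __name__ == "__main__":
    lidar = (25.0, 0.01)  # hypothetical: 25.0 m, very precise
    radar = (25.4, 0.25)  # hypothetical: 25.4 m, noisier
    value, variance = fuse_estimates([lidar, radar])
    print(f"fused distance: {value:.3f} m (variance {variance:.4f})")
```

The fused variance is always smaller than the smallest input variance, which captures the core promise of sensor fusion: the combined picture is more trustworthy than any single sensor.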


Localization and Mapping Systems

GPS (Global Positioning System)

GPS (Global Positioning System) is a critical component of autonomous vehicles (AVs), playing a fundamental role in enabling their safe and efficient navigation. Its uniqueness lies in its ability to provide precise and continuous location data, which is essential for AVs to understand their environment and make informed decisions.

GPS Use Cases

Localization and Mapping: GPS provides AVs with accurate information about their latitude, longitude, and altitude. This information is used in conjunction with detailed maps to pinpoint the AV’s exact position on the road and within its surroundings. Precise localization is crucial for tasks like lane keeping, obstacle avoidance, and route planning.

Navigation and Path Planning: GPS helps AVs determine their current location relative to their intended destination. By continuously tracking their position, AVs can calculate the optimal path to reach their goal, taking into account factors like traffic, road conditions, and speed limits. This enables efficient and safe navigation.

Sensor Fusion: GPS data is integrated with data from other sensors like LiDAR, radar, and cameras. This sensor fusion process allows AVs to create a comprehensive understanding of their environment. While other sensors provide information about nearby objects, GPS helps put that information into the context of the overall map, ensuring the AV’s perception of its surroundings is accurate and consistent.

Vehicle-to-Vehicle (V2V) and Vehicle-to-Infrastructure (V2I) Communication: GPS supplies the position and timing information that AVs exchange with other vehicles, as well as with infrastructure like traffic lights and road signs.

GPS Limitations

Signal Disruptions: GPS signals can be disrupted by obstacles like tall buildings, tunnels, and dense foliage. This can lead to temporary loss of positioning information, which can be dangerous for AVs if they rely solely on GPS for navigation.

Spoofing and Jamming: GPS signals are vulnerable to spoofing (falsifying location data) and jamming (blocking signals). These malicious attacks can mislead AVs and compromise their safety.

Inaccurate in Dense Urban Environments: The accuracy of GPS can degrade in urban canyons due to multipath reflections, where signals bounce off buildings and other structures. This can lead to errors in position estimation.

To address some of these limitations, AVs use multiple sensors in addition to GPS, such as LiDAR, radar, and cameras. This provides redundancy and ensures that if GPS signals are lost, the AV can still navigate safely using other data sources. Also, when GPS signals are unavailable, an inertial navigation system (INS) can be used to provide short-term positioning information.

INS uses accelerometers and gyroscopes to track the AV’s movement. AVs can also be equipped with technologies to detect and mitigate spoofing and jamming attacks.
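
The way an INS bridges a GPS outage is often called dead reckoning: starting from the last known position, it integrates accelerometer and gyroscope readings over time. Below is a minimal 2D sketch using simple Euler integration. The `VehicleState` class, the function name, and all numbers are assumptions made for illustration.

```python
import math
from dataclasses import dataclass


@dataclass
class VehicleState:
    x_m: float = 0.0
    y_m: float = 0.0
    heading_rad: float = 0.0
    speed_m_per_s: float = 0.0


def dead_reckon_step(state, accel_m_per_s2, yaw_rate_rad_per_s, dt_s):
    """Advance the state by one time step using inertial readings only."""
    speed = state.speed_m_per_s + accel_m_per_s2 * dt_s      # from the accelerometer
    heading = state.heading_rad + yaw_rate_rad_per_s * dt_s  # from the gyroscope
    return VehicleState(
        x_m=state.x_m + speed * math.cos(heading) * dt_s,
        y_m=state.y_m + speed * math.sin(heading) * dt_s,
        heading_rad=heading,
        speed_m_per_s=speed,
    )


if __name__ == "__main__":
    # The car drives straight at 10 m/s, but the gyroscope has a small
    # hypothetical bias of 0.01 rad/s, so the estimate slowly veers off.
    state = VehicleState(speed_m_per_s=10.0)
    for _ in range(50):  # 5 seconds at 10 Hz
        state = dead_reckon_step(state, 0.0, 0.01, 0.1)
    print(f"estimated sideways error after 5 s: {state.y_m:.2f} m")
```

The demo also shows why the source calls INS positioning short-term: even a tiny sensor bias accumulates with every step, so the estimate needs to be corrected by GPS or other sensors as soon as they are available again.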

HD Maps (High-Definition Maps)

HD maps are incredibly detailed, containing information about lane structures, road geometry, traffic signs, and more. They are vital for precise navigation and path planning. These maps need regular updates to remain accurate and require significant data storage, but they offer unparalleled precision.

HD Maps Use Cases

Precise Localization: HD Maps provide extremely accurate positional information, often down to the centimeter level. This allows autonomous vehicles to precisely determine their location on the road, even in challenging conditions like tunnels or dense urban environments where GPS signals may be weak or unreliable.

Detailed Road Information: HD Maps contain a wealth of information about the road network, including lane markings, road boundaries, traffic signs, traffic lights, speed limits, and even the elevation profile of the road. This detailed information helps autonomous vehicles understand the road layout and make informed decisions about lane changes, turns, and other maneuvers.

Enhanced Perception: HD Maps can be fused with data from onboard sensors like cameras, lidar, and radar to improve the perception of the environment. This fusion of map and sensor data allows autonomous vehicles to better detect and track objects, predict the behavior of other road users, and plan safe trajectories.

Predictive Capabilities: This mapping technology can include dynamic information about temporary road conditions, such as construction zones, accidents, or lane closures. This information helps autonomous vehicles anticipate potential hazards and adjust their behavior accordingly, enhancing safety and avoiding delays.

Path Planning and Decision Making: HD Maps provide a framework for path planning and decision-making algorithms in autonomous vehicles. Using HD Maps, these algorithms can generate optimal routes, determine safe speeds, and make complex decisions in real time, such as when to merge, change lanes, or overtake.

HD Maps Limitations

Data Collection and Maintenance: Creating and maintaining HD Maps is a complex and resource-intensive process. It requires specialized equipment, such as lidar-equipped vehicles, and continuous updates to keep the maps current as the road environment changes.

Cost: The cost of creating and maintaining HD Maps can be a significant barrier to their widespread adoption. They typically make autonomous vehicles more expensive.

Limited Coverage: HD Maps are currently available only for limited areas, primarily in developed countries and urban centers. Expanding coverage to rural areas and less developed regions remains a challenge.

HD Maps are a crucial component of autonomous vehicle technology, providing essential information for localization, perception, and decision-making.

SLAM (Simultaneous Localization and Mapping)

SLAM enables a vehicle to create or update a map of its surroundings while simultaneously keeping track of its own location. This technology is particularly useful in uncharted or dynamic environments.

SLAM Use Cases

Operate in unknown environments: Unlike GPS-based navigation, which relies on satellite signals and is usually paired with pre-existing maps, SLAM allows autonomous vehicles to navigate in areas without prior knowledge.

Real-time mapping and localization: SLAM works in real-time, constantly updating the map and the vehicle’s position as it moves. This is essential for safe and efficient navigation.

Robustness: SLAM algorithms are designed to be robust to sensor noise and uncertainties, ensuring accurate localization even in challenging conditions.

SLAM Limitations

Computational complexity: SLAM algorithms can be computationally intensive, requiring significant processing power and potentially impacting the vehicle’s response time.

Drift: Over time, errors can accumulate in SLAM estimates, leading to a phenomenon called “drift.” This can cause the vehicle’s position estimate to become inaccurate.

Sensor limitations: SLAM relies heavily on sensor data. Sensor malfunctions, occlusions, or adverse weather conditions can degrade SLAM performance.

Researchers are continuously working to improve SLAM algorithms, address its limitations, and make it more efficient and reliable. Advances in machine learning and sensor technology are expected to play a significant role in the future development of SLAM for autonomous vehicles.

Planning and Decision-Making Systems

Path Planning

Path planning algorithms determine the optimal route from the current position to the destination, considering traffic, road conditions, and obstacles.

There are two main types of path planning in autonomous vehicles:

Global Path Planning

Purpose: Global path planning focuses on finding the most efficient route from the starting point to the destination. It takes into account the entire map of the environment and considers factors like road networks, traffic patterns, and destination location.

Algorithms: Common algorithms used in global path planning are graph search methods such as Dijkstra’s algorithm, A* search, and variants of A* (D*, LPA*). These algorithms search through the map to find the shortest or most optimal path.

Output: The output of global path planning is a high-level route, often represented as a sequence of waypoints or a coarse trajectory. This route serves as a guide for the local path planner.

Challenges: Global path planning can be computationally expensive, especially for large maps. It may also require frequent updates as the environment changes, which can be challenging in real-time scenarios.
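
As a rough illustration of global path planning, here is a compact A* search. To keep it short, the "map" is a small grid of free (0) and blocked (1) cells rather than a real road network, every move costs 1, and the heuristic is the Manhattan distance. All of that is an assumption for the example; a production planner would search a road graph with travel-time costs.

```python
import heapq


def a_star(grid, start, goal):
    """Shortest 4-connected path on a grid as a list of (row, col), or None if unreachable."""
    rows, cols = len(grid), len(grid[0])

    def heuristic(cell):
        # Manhattan distance never overestimates on a 4-connected unit-cost grid.
        return abs(cell[0] - goal[0]) + abs(cell[1] - goal[1])

    open_heap = [(heuristic(start), 0, start)]  # (estimated total, cost so far, cell)
    best_cost = {start: 0}
    came_from = {}
    while open_heap:
        _, cost, current = heapq.heappop(open_heap)
        if cost > best_cost[current]:
            continue  # stale heap entry; a cheaper route to this cell was found later
        if current == goal:
            path = [current]
            while current in came_from:  # walk back to the start
                current = came_from[current]
                path.append(current)
            return path[::-1]
        r, c = current
        for nxt in ((r + 1, c), (r - 1, c), (r, c + 1), (r, c - 1)):
            nr, nc = nxt
            if 0 <= nr < rows and 0 <= nc < cols and grid[nr][nc] == 0:
                new_cost = cost + 1
                if new_cost < best_cost.get(nxt, float("inf")):
                    best_cost[nxt] = new_cost
                    came_from[nxt] = current
                    heapq.heappush(open_heap, (new_cost + heuristic(nxt), new_cost, nxt))
    return None


if __name__ == "__main__":
    city = [
        [0, 0, 0, 0],
        [1, 1, 0, 1],  # a row of blocked cells with a single gap
        [0, 0, 0, 0],
    ]
    print(a_star(city, (0, 0), (2, 0)))
```

The returned list of cells plays the role of the waypoint sequence described above, which a local planner would then refine into a drivable trajectory.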

Local Path Planning

Purpose: Local path planning focuses on generating a detailed and feasible trajectory for the vehicle to follow in the immediate vicinity. It takes into account local obstacles, road boundaries, lane markings, and the vehicle’s dynamics.

Algorithms: Local path planners often utilize geometric algorithms, such as spline-based methods, Dubins curves, or model predictive control (MPC). These algorithms consider the vehicle’s kinematic and dynamic constraints to generate smooth and safe trajectories.

Output: The output of local path planning is a detailed trajectory, typically represented as a sequence of closely spaced waypoints with associated velocities and accelerations. This trajectory is directly executable by the vehicle’s control system.

Challenges: Local path planning must be computationally efficient to react to rapidly changing environments. It also needs to handle complex scenarios like lane changes, overtaking, and interactions with other road users.

Relationship Between Global and Local Path Planning

Global and local path planning work together in a hierarchical manner. The global planner provides the overall direction and high-level route, while the local planner fills in the details and generates a safe and executable trajectory. The two planners often communicate and exchange information to ensure that the generated path is both globally optimal and locally feasible.

Behavioral Planning

Behavioral planning is a crucial component of autonomous vehicle (AV) decision-making systems, bridging the gap between path planning and control. It focuses on predicting the behavior of other road users and generating safe and socially acceptable maneuvers for the AV. The goal is to ensure that the AV not only reaches its destination efficiently but also interacts smoothly and predictably with other vehicles, pedestrians, and cyclists.

Behavioral planning goes beyond simply avoiding collisions. It strives to make the AV’s behavior socially acceptable and predictable. This includes adhering to traffic rules, signaling intentions clearly, maintaining appropriate distances, and respecting the right of way.

Behavioral planning is an active area of research in the autonomous vehicle field. Researchers are developing new prediction models, decision-making algorithms, and simulation environments to improve the performance and social acceptability of AVs. There is also growing interest in incorporating human-like reasoning and decision-making into behavioral planning.

Computing Hardware

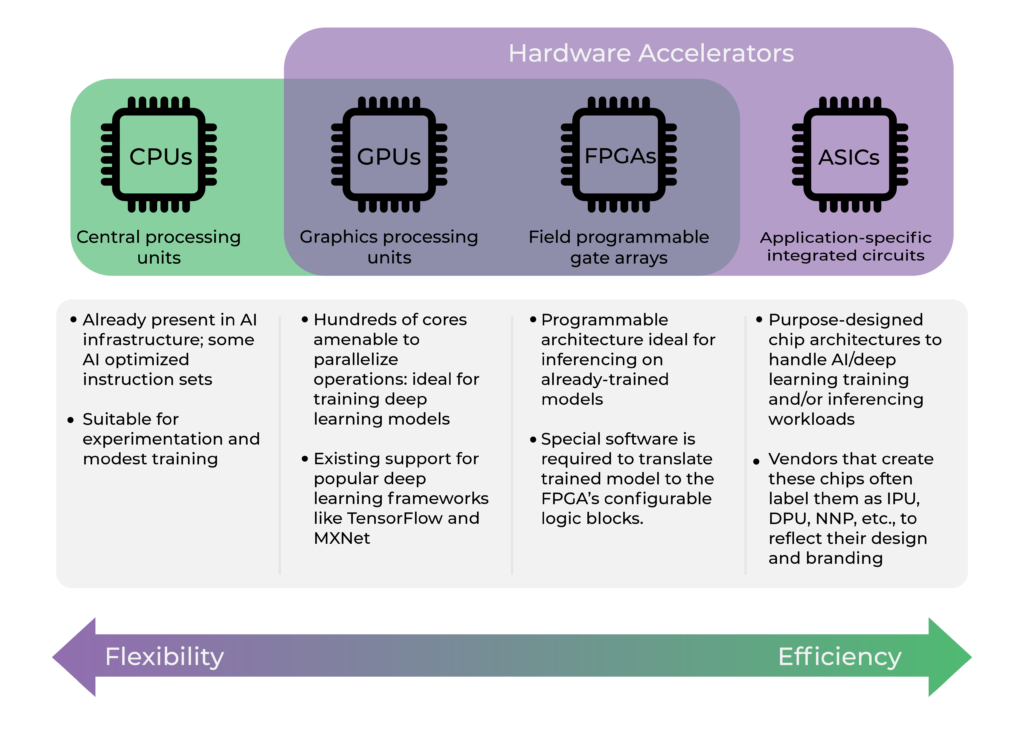

Central Processing Units (CPUs)

The central processing unit (CPU) is the brain of the self-driving system. It processes vast amounts of data from various sensors, makes real-time decisions, and controls the vehicle’s movements. The CPU’s capabilities are crucial for ensuring the safe and efficient operation of AVs. CPUs are the general-purpose processors that handle a wide range of tasks, from running the AV’s operating system to executing decision-making algorithms.

Graphics Processing Units (GPUs)

Graphics Processing Units (GPUs) play a pivotal role in autonomous vehicles (AVs) by accelerating the processing of vast amounts of data generated by various sensors, including cameras, LiDAR, radar, and ultrasonic sensors. Their unique architecture and capabilities make them ideal for handling the computationally intensive tasks involved in perception, planning, and control of AVs.

GPUs offer significantly higher parallel computational throughput than traditional Central Processing Units (CPUs). This allows them to handle complex algorithms for tasks like object detection, image segmentation, and path planning with greater efficiency. Many GPUs are specifically designed to accelerate deep learning workloads and include dedicated hardware, such as NVIDIA’s Tensor Cores, that significantly speeds up matrix multiplications and convolutions, which are core operations in deep neural networks.

While GPUs are notorious for their high power consumption, they play a vital role in enabling the real-time perception, planning, and control capabilities required for safe and efficient autonomous driving.

FPGAs (Field Programmable Gate Arrays)

Field Programmable Gate Arrays (FPGAs) are integrated circuits that can be reconfigured after manufacturing to perform specific tasks. They have become increasingly important in the development of autonomous vehicles (AVs) due to their unique capabilities.

AVs rely on a multitude of sensors like cameras, lidar, radar, and ultrasonic sensors to perceive their environment. FPGAs excel at processing the massive amounts of raw data from these sensors in real-time, enabling the vehicle to understand its surroundings accurately and quickly. They can perform complex algorithms like image processing, object detection, and sensor fusion efficiently, which is crucial for safe and reliable autonomous driving.

FPGAs are also ideal for real-time decision-making in AVs. They can rapidly process sensor data, analyze the environment, and make split-second decisions on tasks like path planning, obstacle avoidance, and trajectory control. This real-time responsiveness is essential for ensuring the safety and efficiency of autonomous driving.

The highly parallel architecture of FPGAs and their low latency mean they can perform multiple operations simultaneously and produce results very quickly. This is critical for AVs, as delays in processing can lead to accidents.

ASICs (Application-Specific Integrated Circuits)

ASICs are custom-designed chips optimized for specific tasks, potentially offering superior performance and energy efficiency compared to general-purpose processors.

ASICs Use Cases

Sensor Processing

LiDAR (Light Detection and Ranging): ASICs are used to process the vast amount of data generated by LiDAR sensors, which create 3D maps of the surroundings. This requires real-time analysis of distance, intensity, and reflectivity data to identify and track objects.

Radar: ASICs help in signal processing, target detection, and classification of radar data. They enable accurate object detection in various weather and lighting conditions.

Cameras: ASICs are used for image processing, object recognition, lane detection, and traffic sign recognition. They contribute to the overall perception capabilities of the autonomous vehicle.

AI Acceleration

Deep Learning: ASICs are designed to accelerate specific AI workloads, particularly deep neural networks used in object detection, classification, and decision-making. They provide the computational power required for real-time processing of complex algorithms.

Inference: ASICs are optimized for inference, which is the process of applying a trained AI model to new data. They enable fast and efficient decision-making in autonomous vehicles.

Functional Safety

Redundancy: ASICs can be used to implement redundant safety systems, providing backup functionality in case of a primary system failure.

Fault Tolerance: ASICs can be designed to tolerate certain faults and errors, ensuring the safety and reliability of critical functions in the autonomous vehicle.

ASICs are essential components of autonomous vehicles. They provide the necessary processing power, efficiency, and safety features.


Control Systems

Controller Area Network (CAN)

Controller Area Network (CAN) is a robust and reliable communication protocol designed for real-time control applications in vehicles, including autonomous ones. It’s the robocar’s internal communication network, a message-based system where different electronic control units (ECUs) within a vehicle can exchange data. Think of it as a nervous system allowing various components of the car to talk to each other.

CAN helps in collecting, synchronizing, and distributing sensor data to the central processing units for interpretation and decision-making. It also serves as the communication pathway for sending control commands to actuators such as the steering system, braking system, and throttle, ensuring precise and coordinated movements.

How CAN Works in AVs

1. Nodes: Each ECU connected to the CAN bus is considered a node. These nodes can be sensors, actuators, or other processing units.

2. Messages: Data is transmitted in the form of messages. Each message has an identifier that indicates the type of data and its priority.

3. Bus: The CAN bus is a shared communication medium. All nodes can listen to messages on the bus, but only the intended recipient node processes the relevant information.

CAN is designed to be robust against electrical interference and errors, making it a reliable choice for safety-critical applications.
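
The node, message, and bus ideas above can be modeled in a few lines. This sketch is a simplified simulation, not a driver for real CAN hardware. It assumes classical CAN with 11-bit identifiers, where the frame with the lowest identifier wins when several nodes try to transmit at once. The identifiers and node names are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class CanFrame:
    can_id: int  # 11-bit identifier; a lower value means higher priority
    data: bytes  # classical CAN carries at most 8 data bytes per frame

    def __post_init__(self):
        if not 0 <= self.can_id <= 0x7FF:
            raise ValueError("standard CAN identifiers are 11 bits wide")
        if len(self.data) > 8:
            raise ValueError("classical CAN payload is limited to 8 bytes")


def arbitrate(pending_frames):
    """Return the frame that wins the bus: the one with the lowest identifier."""
    return min(pending_frames, key=lambda frame: frame.can_id)


def receivers(frame, node_filters):
    """Names of the nodes whose acceptance filter includes this frame's identifier."""
    return [name for name, accepted in node_filters.items() if frame.can_id in accepted]


if __name__ == "__main__":
    # Hypothetical identifiers: safety-critical messages get the lowest numbers.
    pending = [
        CanFrame(0x300, b"\x16"),      # cabin temperature
        CanFrame(0x010, b"\x01\xff"),  # brake command
        CanFrame(0x120, b"\x00\x2a"),  # steering angle
    ]
    filters = {"brake_ecu": {0x010}, "steering_ecu": {0x120}, "dashboard": {0x120, 0x300}}
    winner = arbitrate(pending)
    print(f"bus winner: 0x{winner.can_id:03X}, processed by {receivers(winner, filters)}")
```

Giving the brake command the lowest identifier means it is never stuck behind a less urgent message, which is how the priority field supports safety-critical control.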

Motion Control

Motion control is the bridge between path planning and the actual movement of an autonomous vehicle (AV). It involves translating the planned trajectory into precise commands for the vehicle’s actuators (throttle, brakes, steering) to execute. In essence, motion control is the “hands and feet” of the AV, responsible for making the planned path a reality on the road. Common algorithms used for motion control are PID controllers (Proportional-Integral-Derivative) and Model Predictive Control (MPC), which help in maintaining smooth and precise vehicle movements.
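
For a feel of how a PID controller works, here is a minimal sketch of one used as a cruise-control-style speed controller. The gains, the acceleration limit, and the toy vehicle model (speed simply changes by the commanded acceleration) are all assumptions for illustration; real controllers are tuned against the actual vehicle dynamics.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class PIDController:
    kp: float  # proportional gain: reacts to the current error
    ki: float  # integral gain: removes lingering steady-state error
    kd: float  # derivative gain: damps rapid changes
    integral: float = 0.0
    previous_error: Optional[float] = None

    def update(self, setpoint: float, measurement: float, dt_s: float) -> float:
        """Return the control output for one time step (dt_s must be positive)."""
        error = setpoint - measurement
        self.integral += error * dt_s
        if self.previous_error is None:
            derivative = 0.0  # no history yet on the first call
        else:
            derivative = (error - self.previous_error) / dt_s
        self.previous_error = error
        return self.kp * error + self.ki * self.integral + self.kd * derivative


def simulate_speed(controller, target_m_per_s, steps, dt_s=0.1, max_accel=3.0):
    """Toy vehicle: the controller output is an acceleration command, clamped to limits."""
    speed = 0.0
    for _ in range(steps):
        command = controller.update(target_m_per_s, speed, dt_s)
        accel = max(-max_accel, min(max_accel, command))  # actuator limits
        speed += accel * dt_s
    return speed


if __name__ == "__main__":
    pid = PIDController(kp=0.8, ki=0.0, kd=0.0)
    print(f"speed after 30 s: {simulate_speed(pid, 20.0, 300):.2f} m/s")
```

The clamp on the command stands in for the physical limits of the throttle and brakes, which is exactly the boundary between motion control and the actuators.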

Actuators

Actuators are the devices that execute control commands. In simple terms, they translate the instructions from the vehicle’s path planning and control systems into real-world movements.

Actuators include electric motors for steering, hydraulic systems for braking, and other mechanisms that ensure the vehicle responds promptly and accurately to control signals.

Actuators are like the muscles of the autonomous vehicle, turning the brain’s decisions into physical actions.

Actuator Use Cases

Acceleration and Braking: Actuators control the engine or motor to speed up, slow down, or come to a complete stop.

Steering: They adjust the angle of the wheels to navigate turns and curves.

Gear Shifting: In vehicles with traditional transmissions, actuators shift gears to optimize performance and efficiency.

Signaling: They activate turn signals, brake lights, and hazard lights to communicate with other road users.

The precision and reliability of actuators are critical for the safety and performance of AVs.

Supporting Systems and Infrastructure

V2X Communication (Vehicle-to-Everything)

V2X communication allows the AV to interact with external entities. It’s like giving AVs a sixth sense, allowing them to communicate with everything around them: other vehicles, traffic lights, road signs, and even pedestrians’ smartphones. This exchange of information extends the AV’s perception beyond its onboard sensors, allowing it to make more informed decisions and navigate complex scenarios more safely.

V2X Communication Use Cases

Extended Perception: V2X communication provides AVs with a 360-degree awareness of their surroundings, overcoming the limitations of onboard sensors like cameras, lidar, and radar. It allows AVs to “see” beyond line-of-sight, detect hidden obstacles, and anticipate the actions of other road users.

Cooperative Maneuvers: V2X enables AVs to coordinate their actions with other vehicles, facilitating maneuvers like lane merging, intersection crossing, and platooning. This coordination can improve traffic flow, reduce congestion, and enhance overall safety.

Enhanced Safety: By sharing information about potential hazards, road conditions, and their own intentions, AVs equipped with V2X can proactively avoid collisions, mitigate accidents, and respond to emergencies more effectively.

Traffic Optimization: V2X data can be used to optimize traffic flow by adjusting signal timings, routing vehicles around congestion, and providing real-time traffic information to drivers. This can lead to reduced travel times, lower fuel consumption, and improved air quality. Technologies such as DSRC (Dedicated Short-Range Communications) and C-V2X (Cellular Vehicle-to-Everything) facilitate traffic management, collision avoidance, and enhanced navigation, creating a more connected and intelligent transportation ecosystem.

V2X communication involves the exchange of sensitive data, and this has raised concerns about cybersecurity threats, data privacy, and potential misuse of information.

AV engineering experts are working on robust security measures essential to protect the integrity and confidentiality of V2X data.

Power Supply Systems

Batteries

Batteries provide electrical power to all of an electric AV’s components. These large battery packs, usually located at the bottom of the vehicle, supply energy for sensors, computers, and actuators. Batteries are not just a power source, but an integral component that affects the AV’s performance, range, safety, and overall functionality.

User Interface (UI) Systems

Human-Machine Interface

Human-machine interface (HMI) features in an autonomous vehicle provide information to the passengers and allow interaction with the AV system. They are also known as the display and control features. These features include screens, touch interfaces, and control panels inside the vehicle. They enable users to input destinations, monitor the vehicle’s status, and take control if necessary.

These are the major components of autonomous vehicles that make them work. Self-driving technologies are here to stay. As future upgrades are applied to this technology, the components and functionalities of the driverless car can only get better.

Autonomous driving is not without its challenges, but overall, the future is bright. The journey to “Level 5” fully autonomous vehicles is still ongoing. However, the progress made so far is nothing short of astounding. It’s a testament to human ingenuity and our relentless pursuit of innovation.

As we continue to refine and improve driverless technology, we can look forward to a future where transportation is safer, more accessible, and more sustainable.

Expect to see more sophisticated AVs in the United States and across the world in the coming years.

I’m Dr. Brandial Bright, also known as the AVangelist. As a dedicated and passionate researcher in autonomous and electric vehicles (AVs and EVs), my mission is to educate and raise awareness within the automotive industry. As the Founder and Managing Partner of Fifth Level Consulting, I promote the adoption and innovation of advanced vehicle technologies through speaking engagements, consulting, and research as we progress to level 5 fully autonomous vehicles.